Reviewing the Status telemetry server software, and its future.

Origin

The Status telemetry was originally created to measure message reliability in the Status application.

It attempted to trace messages in the network by (roughly) having:

- Alice sends message id

123over Waku, and report to telemetry server that she sent it. - Bob receives message id

123over Waku, and reports to telemetry server that he received it. - Carol does not receives message id

123over Waku… and does not report it to telemetry service

Telemetry server can then say that we have 50% reliability because Carol did not receive the message.

Yes, this is flawed and simplified continue to read…

Taking over

The Waku team took over the telemetry service in 2024 as the primary objective was to make the usage of Waku reliable by the Status app. Hence, we looked at existing tooling to measure reliability, one of those tools being the telemetry server.

We quickly learned, from discussing with original Status developer and investigating ourselves, that the complexity of what the telemetry server attempts to do, makes its own reliability… poor.

Indeed, Carol not reporting that she did not see the message does not tell us much:

- Maybe Carol was offline and never came back online

- Maybe she did receive the message but could not report it to the telemetry server

- etc

Which then implies further complexity in the logic to be able to infer whether or not Carol should have received the message.

So instead, we slowly simplified the reported metrics. So we could learn about message reliability, even if the telemetry service was itself unreliable [1].

Successes

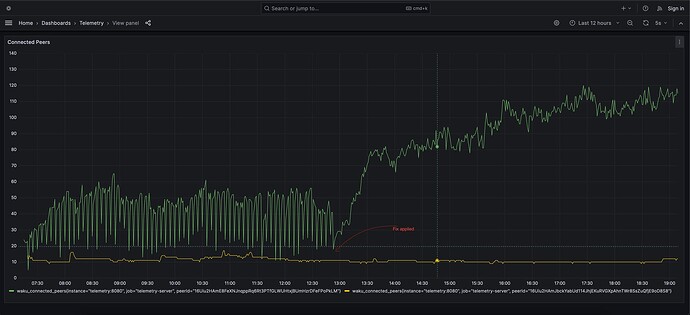

The telemetry service allowed us to improve reliability in the Status app, by collecting and reviewing data around connectivity: number of peers connected, peers discovered, connection failures, etc (I invite the Waku team to provide some examples).

Local (dev) vs Global (users)

The aim of the telemetry service was to collect data from various users, so we could draw conclusions on how well, in general, Status and Waku behave.

However, most lessons came from Waku CCs running the application locally and looking at the behaviour of their own instance, over telemetry.

Current needs

Thanks to the work done in 2024, we reached a stable point on the Status app in terms of peer connectivity, discovery, and usage of Waku protocols. As well as stabilizing e2e reliability for one-to-one messages (MVDS) [2].

A major remaining point is e2e reliability for Communities (SDS), we have defined a protocol [3] and are at the last stage of building a nim library [4]: writing the C API [5] to integrate it in Status app.

The next step is reviewing in more details the existing chat protocol, in terms of message rate and bandwidth. Indeed, if we want Status to scale, we need to ensure chat protocols scale [6]. To do so, we need to ensure that the number of messages produced by each user is limited, this then allows us to better understand how network bandwidth usage grows with the number of users (beyond current modelling) and how to apply RLN effectively.

Hence, the next phase is bandwidth and message rate analysis [7]. For this, we do not need aggregation of metrics across users. Instead, we need to look at protocol behavior in various scenarios (part of a community, sending or receiving messages, etc).

Prometheus

To achieve the current needs, with only local view of protocol behavior needed, Prometheus is a satisfying solution as previously described in [7].

We do not need a remote server to aggregate data. We can use off-the-shell technology (Prometheus and Grafana).

It would be fair for Waku CCs to run a local Grafana instance in docker, with pre-defined dashboards to look at message rate and bandwidth usage of a Status instance in various run conditions.

Telemetry Server Future

In terms of immediate work, there is no need to do further improvement on the telemetry server.

The only piece of information I am not sure we wrapped up is whether hole punching works for users. We started to track connection dial failures, but we don’t believe enough users have Waku telemetry enabled to have significant learnings.

In the future, we may need to learn about specific user application behaviour from a Waku PoV. However, because the telemetry server is bespoke code, the next person working on it will have similar learning curve that we faced when taking over it this year.

Moreover, the telemetry server extensibility is costly. When adding new metrics, a new table needs to be added to the instance running. Prometheus is much more flexible as the software just need to start reporting to get those metrics available.

Hence, sunsetting the telemetry server and preferring using off-the-shelf solutions such as Grafana and Prometheus seems preferable in terms of potential future effort.

Some work would be necessary to aggregate data from users’ Prometheus on a single instance. But this could be done from scratch when the needs raises

Proposal

Hence, the following proposal:

- Convert existing, useful, telemetry measurements in status-go to Prometheus, ensure that the go-waku ones are also present in nwaku.

- Sunset the telemetry service in favour of local metrics, including adding a toggle to enable Prometheus metrics in desktop and mobile apps, disabled by default (this can be further discussed in [7]).

- Prefer DST large scale simulations over collecting user metrics to confirm reliability or other Waku / Status behaviours over measuring in users’ apps. Which is also better for privacy.

- If the need arises, probably from specific user feedback/issue, that we need to aggregate network metrics from user application; build a solution that aggregate Prometheus metrics instead of using telemetry server.

Call to feedback

Please provide your opinion and expertise to this matter.

References

- [1] [Deliverable] Telemetry: direct message reliability · Issue #182 · waku-org/pm · GitHub

- [2] [Deliverable] Review MVDS usage and fail path · Issue #189 · waku-org/pm · GitHub

- [3] rfc-index/vac/raw/sds.md at main · vacp2p/rfc-index · GitHub

- [4] 2024-12-02 Waku Weekly

- [5] API Specification for End-to-end Reliability

- [6] Proposal on how to manage inactive users in a community - Communities - Status.app

- [7] Prometheus, REST and FFI: Using APIs as common language - #8 by gabrielmer