We have been working on integrating the libp2p mix protocol into Waku, beginning with the lightpush protocol, as per the raw Waku-Mix spec.

So far, the request path of the lightpush protocol has been integrated successfully, and some simulations have been performed to validate it. The reply path has not been integrated yet and will be done once Single Use Reply Blocks (SURBs) are implemented in mix.

Just jotting down the results and observations of the simulations here.

All the simulations have been performed using waku-simulator, a tool for simulating a test network.

Simulation Details

A 21-node nwaku+mix cluster is run in the simulation along with one or two lightpush publishers. Each publisher publishes 10K messages in total, with an interval of 100 ms between messages.

Some metrics have been added to the mix protocol in order to observe and monitor the simulation and its results.

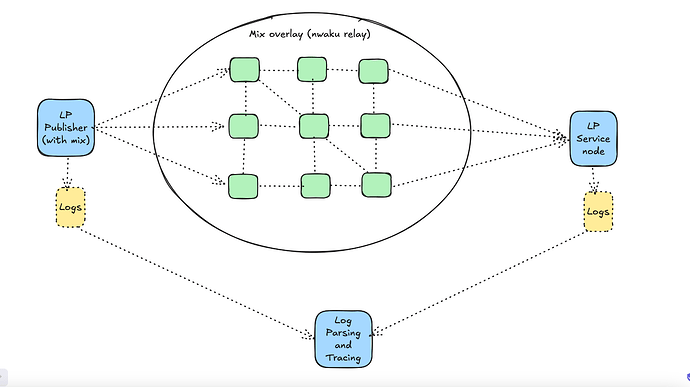

Logs were captured from the publisher and the lightpush service node and analyzed with the Vac-DST team's log analyzer tool to derive latency.

Below is a diagrammatic representation of the setup.

Mix protocol parameters used in the runs:

- Path length L = 3 (default)
- Delay added by each mix node: randomly chosen between 1 and 3 ms (default)
- Mix payload size: 4400 bytes, chosen to match the average message size in Status
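As a rough worked example of what these parameters imply, the artificial delays alone add between 3 × 1 ms and 3 × 3 ms to each message, assuming each of the L = 3 mix nodes in the path applies its own independently drawn delay. The sketch below samples that total; the helper name is hypothetical and not part of the mix implementation, and processing and network time are deliberately not modelled.

```python
import random


def sample_total_mix_delay_ms(path_length=3, min_delay_ms=1.0, max_delay_ms=3.0, rng=None):
    """Sum of per-hop artificial delays for one message (hypothetical helper).

    Assumes each of the `path_length` mix nodes independently draws a delay
    uniformly from [min_delay_ms, max_delay_ms]. Processing and network
    latency are not included.
    """
    if rng is None:
        rng = random.Random()
    return sum(rng.uniform(min_delay_ms, max_delay_ms) for _ in range(path_length))


if __name__ == "__main__":
    rng = random.Random(42)
    samples = [sample_total_mix_delay_ms(rng=rng) for _ in range(10_000)]
    print(f"min={min(samples):.2f} ms  "
          f"mean={sum(samples) / len(samples):.2f} ms  "
          f"max={max(samples):.2f} ms")
```

Under this assumption the total always lies between 3 and 9 ms, with a mean of about 6 ms (3 hops × a 2 ms average delay).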

Note that the publishers wait until their local mix node pool reaches 21, i.e. the complete set of mix nodes in the network, before they start publishing.

Since one of the lightpush service nodes is chosen as the destination, effectively only 20 mix nodes are available to the publishers.
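A minimal sketch of the pool check and path selection described above, assuming a publisher samples L = 3 distinct mix nodes uniformly at random from its local pool after excluding the destination service node. The names `pool_is_complete` and `select_mix_path` and the string node IDs are hypothetical illustrations, not the nwaku or mix API.

```python
import random


def pool_is_complete(pool, expected_size=21):
    """Publishing starts only once the local pool holds every mix node."""
    return len(set(pool)) >= expected_size


def select_mix_path(pool, destination, path_length=3, rng=None):
    """Pick `path_length` distinct mix nodes, never using the destination as a hop."""
    if rng is None:
        rng = random.Random()
    # Sorting makes the result reproducible for a seeded rng; with 21 nodes
    # in the pool, 20 candidates remain once the destination is removed.
    candidates = sorted(set(pool) - {destination})
    if len(candidates) < path_length:
        raise ValueError("not enough mix nodes to build a path")
    return rng.sample(candidates, path_length)


if __name__ == "__main__":
    pool = [f"node-{i}" for i in range(21)]
    destination = "node-0"  # the lightpush service node chosen as destination
    if pool_is_complete(pool):
        print(select_mix_path(pool, destination, rng=random.Random(1)))
```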

Simulation Results

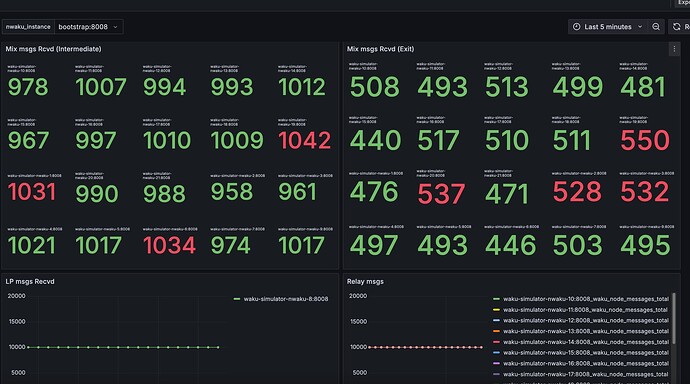

Below is a screenshot of metrics from Grafana which shows how many messages were processed by each node (acting as an intermediary or exit node). It also shows the overall lightpush and relay messages in the network.

The same test was also rerun with 2 publishers. An important observation is that the distribution of messages across the mix nodes is almost uniform, which indicates that traffic through the mix network will be well balanced if each publisher randomly chooses nodes from the same pool.
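One way to put a number on "almost uniform" is to compare each node's processed-message count with the count expected under uniform random selection. Assuming every message is processed by exactly L = 3 distinct nodes drawn from the 20 available, each node should process about total_messages × 3 / 20 messages, i.e. about 1,500 for a single publisher sending 10K messages. The helper below is a hypothetical sketch; in practice its input would be the per-node counts from the metrics, while the demo feeds it simulated traffic, not measured data.

```python
import random
from statistics import mean, pstdev


def uniformity_stats(counts, total_messages, path_length=3):
    """Summarise how evenly messages were spread over mix nodes.

    `counts` maps node id -> number of messages that node processed.
    Assumes every message is processed by exactly `path_length` distinct
    nodes chosen uniformly from the nodes present in `counts`.
    """
    values = list(counts.values())
    expected = total_messages * path_length / len(values)
    return {
        "expected_per_node": expected,
        "mean": mean(values),
        # Coefficient of variation: 0 means a perfectly even load.
        "cv": pstdev(values) / mean(values),
        # Largest relative deviation of any node from the expected count.
        "max_rel_deviation": max(abs(v - expected) for v in values) / expected,
    }


if __name__ == "__main__":
    rng = random.Random(7)
    nodes = [f"node-{i}" for i in range(1, 21)]
    counts = dict.fromkeys(nodes, 0)
    for _ in range(10_000):  # simulated traffic, not measured results
        for hop in rng.sample(nodes, 3):
            counts[hop] += 1
    print(uniformity_stats(counts, total_messages=10_000))
```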

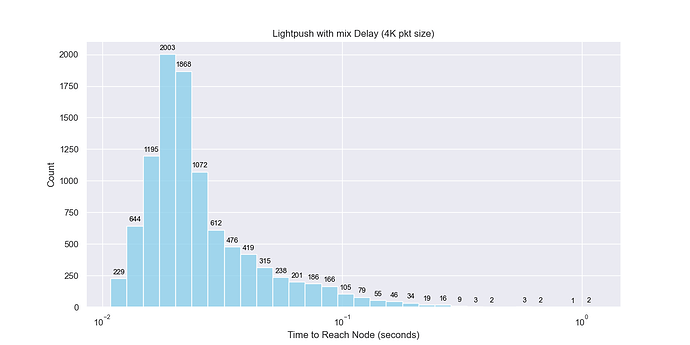

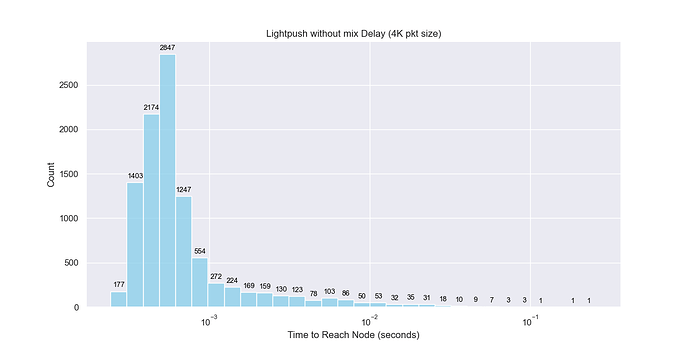

To measure the delay introduced by adding mix to the lightpush path, 2 simulations were run: mix is used in the first, and plain lightpush without mix is used in the second. Below are the graphs showing the delay between the message being published via lightpush (at the client) and the message being received by the lightpush protocol (on the service node). Note that this does not include the actual processing delay of the lightpush message at the service node.
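The latency here is the gap between two timestamps for the same message: when the client publishes it via lightpush and when the service node's lightpush protocol receives it. A minimal sketch of that pairing step follows, assuming each log line has already been parsed into a message id and a timestamp in milliseconds. The record shape and the function name are hypothetical and do not reflect the actual format used by the Vac-DST log analyzer.

```python
from statistics import median


def pair_latencies_ms(published, received):
    """Return per-message latency (ms) for messages seen on both sides.

    `published` and `received` map message id -> timestamp in milliseconds,
    taken from the publisher and service-node logs respectively. Messages
    missing from either side are skipped. Both logs are assumed to share a
    clock (for example, containers on a single simulation host).
    """
    return {
        msg_id: received[msg_id] - sent_at
        for msg_id, sent_at in published.items()
        if msg_id in received
    }


if __name__ == "__main__":
    published = {"m1": 1000.0, "m2": 1100.0, "m3": 1200.0}
    received = {"m1": 1038.5, "m2": 1141.0}  # m3 never arrived
    latencies = pair_latencies_ms(published, received)
    print(latencies)
    print(f"median = {median(latencies.values()):.2f} ms")
```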

From the graphs, the delay/latency:

- without mix is between 0.1 and 10 ms, with the majority less than 1 ms
- with mix is between 10 and 100 ms, with the majority around 40 ms

Note that in a real network scenario, the network latency between the mix nodes in the path would add further latency.