In this post, I intend to initiate a discussion about the planning and scheduling of work. My goal is to gain a deeper understanding of the expectations related to project management in Logos overall. Additionally, I seek to determine whether the approach I am suggesting for Waku is both practical and aligned with these expectations.

I also plan to dive into topics raised by the Waku team, such as distinguishing between critical and non-critical work, milestone completion, and the relationship with multi-client implementation.

Milestone Concept

Following discussions with various leadership and program members of Logos, it has become clear that the more rigorous milestone and budget-per-milestone approach is meant to establish a tighter link between spending and delivery.

Here’s a summary of how this approach was presented to me:

- We define a milestone to deliver feature X.

- Work is planned and executed across all functions (e.g., research, development, QA, Docs) to deliver an MVP of feature X.

- This work is still broken down into Epics.

- After delivering the MVP of feature X, the impact of the feature is measured across various metrics (user adoption, user feedback, etc.).

- Based on cost and metrics, stakeholders can make informed decisions on whether to:

  - Continue investing in feature X beyond MVP and start adding more functionality.

  - Abandon feature X due to poor ROI.

From my perspective, this approach resembles the Shape Up process from Basecamp, where feature building is viewed as a “bet” with a specified “appetite.”

The advantages of this approach include:

- Clearly defining the appetite (time and effort) for a deliverable in relation to possible ROI, rather than working within an unbounded time limit.

- Explicitly reviewing a bet (deliverable, MVP/PoC, small feature set) and evaluating whether it’s a win (build more) or a loss (drop the feature), instead of risking a large bet (full feature set unpopular with users).

However, it does come with downsides:

- The overall delivery time for a full feature set may be extended, as checkpoints are necessary to evaluate the impact/ROI of each bet/deliverable. This means the next deliverable should not be started until the previous one is evaluated.

- There is an increased project management overhead; each milestone needs to be estimated and planned to enable measuring ROI and appetite.

Defining a Milestone

Regarding the definition of a Milestone, I suggest the following criteria:

- Clear User Benefit: The milestone should aim to provide a distinct benefit or feature to the user, whether they are end users, operators, developers, etc.

- Minimal Scope: The milestone should be trimmed to a minimal scope, encompassing only what is “just enough” to assess the potential impact of these features on the project’s metrics (e.g. number of users, revenue).

- Attached Estimate: An estimate should be associated with the milestone to facilitate the measurement of potential ROI. Additionally, tracking the estimate versus the actual progress is crucial for identifying any deviation and making informed decisions (e.g., deciding whether to continue if the estimate doubles as work progresses).
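The estimate-versus-actual tracking in the last criterion can be sketched as follows. This is a minimal illustration, not an existing Logos or Waku tool: the `MilestoneEstimate` class, the person-week unit, the linear projection and the 2.0 review threshold (taken from the "estimate doubles" example) are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class MilestoneEstimate:
    """Hypothetical record of a milestone's estimate and spend so far."""

    name: str
    estimated_weeks: float  # assumed unit: person-weeks
    actual_weeks: float     # effort spent so far
    progress: float         # fraction of scope delivered, between 0 and 1

    def projected_weeks(self) -> float:
        # Naive linear projection: if 50% of the scope took 10 weeks,
        # expect 20 weeks in total.
        if self.progress <= 0:
            raise ValueError("progress must be positive to project a total")
        return self.actual_weeks / self.progress

    def deviation(self) -> float:
        # Ratio of projected total effort to the original estimate
        # (1.0 means on track).
        return self.projected_weeks() / self.estimated_weeks

    def needs_review(self, threshold: float = 2.0) -> bool:
        # threshold=2.0 mirrors the "estimate doubles" example; an assumption.
        return self.deviation() >= threshold


m = MilestoneEstimate("feature X MVP", estimated_weeks=8, actual_weeks=10, progress=0.5)
print(f"{m.name}: projected {m.projected_weeks():.0f} weeks, deviation x{m.deviation():.1f}")
print("review the bet" if m.needs_review() else "continue")
```

A real process would likely use a better projection than a straight line, but even this crude ratio is enough to trigger a conversation about whether to continue.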

Implementation in Waku

The current Waku milestones each bundle a set of work and features aimed at a specific outcome, such as ensuring scalability or launching a network with built-in DoS protection and autoscaling.

These milestones are further divided into epics. For The Waku Network Gen0, epics are organized into tracks.

However, when considering the outlined Milestone Concept, it becomes apparent that the defined milestones are notably extensive:

- The appetite spans over 6 months for the entire team.

- They encompass a multitude of features.

This structure lacks the proposed granularity. In addition, some of the bets/scopes in the milestones, including certain sub-epics, were abandoned or added as the team gained more insight. This means a lack of control over a specific, well-scoped output. The larger the work, the more likely the scope is to change (whether through creep or cuts) and the harder the ROI is to measure (which specific feature drives the ROI up?).

Waku Work Cycle

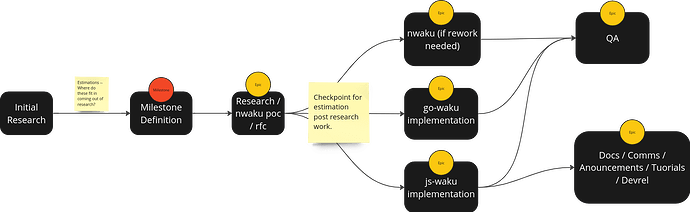

The work cycles observed in Waku can be broadly categorized into two main streams:

Research (add/edit protocol):

- Establishing the problem statement.

- Gathering potential solutions.

- Defining the chosen solution.

- Implementing a Proof of Concept (PoC) in nwaku.

- Running simulations to confirm behavior.

- Drafting an RFC.

- Engaging in review cycles.

- Merging the RFC and nwaku PoC.

- Refining/optimizing nwaku code.

- Implementing the solution in go-waku.

- Implementing the solution in js-waku.

- Considering possible protocol/RFC revisions.

- Updating documentation if necessary.

(pure) Engineering:

- Defining the problem statement.

- Exploring possible solutions.

- Implementing the engineered solution via a PR.

- Participating in a review cycle for the PR.

- Merging the PR.

- Updating documentation if necessary.

Presently, the QA effort operates independently and is not strictly integrated into the development loop, as it is still catching up on existing protocols.

It’s important to note that the eco dev work cycle is more intricate and involves:

- Identifying opportunities (talks, events, workshops).

- Organizing events, negotiating with organizers, or co-funding side events.

- Managing the Business Development (BD) pipeline, generating and converting leads.

- Providing support to existing users.

- Handling social media coverage and content strategy.

Eco dev work is considered outside of the scope of this discussion.

Considerations

Minimal Scope for Research

An open question is what can qualify as a minimal scope when research is needed.

Research can take several months in itself and is often a larger bet than pure engineering.

It would make sense for research milestones to stop at step 8 of the research cycle: RFC merged, nwaku PoC merged.

In this instance, we can attempt to measure the RFC with:

- operator adoption

- developer/project interest in the RFC.

However, this might provide only light feedback. Strong relations with stakeholders are needed to ensure feedback is collected regarding the properties of the delivered protocol.

Only once the PoC is available in js-waku (and bindings) do we get true feedback from developers (e.g. bounties, hackathons). This argues for adding both the js-waku (and bindings) and docs steps to the milestone.

Moreover, having separate milestones for research (PoC), operators (nwaku productionized) and hackers (js-waku) is likely to lead to an explosion of milestones.

Finally, do we really want to advertise a feature if it is not documented and available to both developers and operators?

Implementation Across Clients

This raises the question of implementation across the various clients, and whether none, some or all milestones (whether research or engineering) must include the implementation across all clients as part of the deliverable.

Do note that each client has a specific scope. For example, discv5 is not implemented in js-waku on purpose. In any case, whether work is to be done in a given client should be carefully considered (as highlighted in this post and this comment).

Epics vs Milestone

Finally, if a milestone is the minimal amount of work needed to have a PoC or MVP level feature, then how can epics further subdivide the work?

One way is to do it on a team/activity basis, e.g.:

- research

- nwaku

- js-waku

- docs

- QA

- etc

Pros and cons:

- pro: Research is likely to apply across repos (nwaku, research, Vac/RFC), so a common epic label can help track the work across repos. The same goes for QA.

- con: Docs and client implementation are each done in a single repo, making a specific epic label less useful.

- pro: It forces us to think about the client implementation and QA components of each milestone.

- con: One milestone may take several months to complete, although each sub-team can have a feeling of completion once their epic is done.

- pro: A milestone is considered done once implemented across relevant clients, which means getting true feedback and limiting the number of milestones.

Proposed implementation

Proposal to organise Waku work:

A milestone delivers an MVP feature ready for operators and developers to use.

Epics are subteam related:

- E:Research ...: research to design protocol + RFC changes + PoC in nwaku + simulations (should we separate?). May not be relevant if engineering only epic.

- E:nwaku ...: may not be relevant if no handover to nwaku engineer is needed

- E:go-waku ...

- E:js-waku ...

- E:QA ...: forces to plan for QA work

- E:Docs ...: include dev, operator docs and potential examples, video tutorial, etc
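To make the naming convention concrete, here is a small sketch that builds one epic label per subteam for a given milestone. The `epic_labels` helper and the `SUBTEAMS` list are hypothetical names for illustration only; the `E:<subteam> <feature>` pattern is the one proposed above, and "feature X" stands in for any milestone.

```python
def epic_labels(feature: str, subteams: list[str]) -> list[str]:
    """Build one "E:<subteam> <feature>" label per subteam involved."""
    return [f"E:{team} {feature}" for team in subteams]


# Subteam names taken from the proposal; drop those that are not relevant
# to a given milestone (e.g. Research for an engineering-only milestone).
SUBTEAMS = ["Research", "nwaku", "go-waku", "js-waku", "QA", "Docs"]

for label in epic_labels("feature X", SUBTEAMS):
    print(label)
```

Whether these labels are created in every repo or only where the work happens is a separate decision, touched on in the pros and cons above.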

A review of the milestone can be done once RFC is done. Close collaboration with stakeholders should be done before full delivery of milestone. For example, if the protocol’s properties, when defined and measured, are not satisfactory then the bet could be considered lost before work in js-waku/go-waku is done.

Because of the size of the work, each epic is likely to need a start and due date, to ensure we stay on track in terms of budget and timeline.

Strict PM processes are needed, with review cycles to ensure that epics and milestones are on schedule, the impact of deliverables is assessed, estimations are done and reviewed in retrospect, and work is scheduled in the pipeline.

This also needs to happen with the flexibility of being able to drop, shelve, and re-prioritize milestones, according to the expected or actual impact and ROI.

Finally, each subteam has a clear start/end and closure for their epic. Meaning that even if the milestone per se is still open, a subteam can keep a clean slate and only track their ongoing epics. BU Lead and PM own the milestones.
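The closure rule described here (a subteam is done when its epics are closed, while the milestone stays open until every epic is closed) can be sketched as below. The `Epic` and `Milestone` classes are hypothetical and do not correspond to any existing tracker API; the example statuses are made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Epic:
    subteam: str
    closed: bool = False


@dataclass
class Milestone:
    name: str
    epics: list[Epic] = field(default_factory=list)

    def open_epics(self) -> list[str]:
        # Subteams that still have work to deliver for this milestone.
        return [e.subteam for e in self.epics if not e.closed]

    def is_done(self) -> bool:
        # The milestone closes only once every epic is closed.
        return not self.open_epics()

    def subteam_done(self, subteam: str) -> bool:
        # True when all epics of this subteam are closed. Note that this is
        # also True for a subteam that has no epic in the milestone.
        return all(e.closed for e in self.epics if e.subteam == subteam)


ms = Milestone(
    "feature X MVP",
    [Epic("Research", closed=True), Epic("nwaku", closed=True), Epic("js-waku"), Epic("Docs")],
)
print("nwaku done:", ms.subteam_done("nwaku"))
print("milestone done:", ms.is_done(), "- still open:", ms.open_epics())
```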

Case Studies

Research to MVP

Using Waku Research - Post Gen 0 Milestones and Epics as a (simplified) research case study:

Milestone: TWN Store Upgrade (SU)

Goal: After this upgrade, the network will provide distributed and synchronised store services

The milestone would be the implementation of the proposed protocols in all clients.

Some clarification may be required on distributed store vs store synchronization: whether one can happen without the other, and what the appetite/priority is for each.

Should there be 2 milestones? one for distributed store and one for store synchronization? Assuming 2 milestones does not make sense, we have one milestone for this work.

Milestone: Distributed and synchronized store services

Epics:

- E:Research store message hashes

- E:Research store synchronization

- E:nwaku store message hashes (assuming possible index/DB optimization needed)

- E:nwaku store synchronization (ditto)

- E:go-waku store message hashes

- E:go-waku store synchronization

- E:js-waku store message hashes

- E:js-waku store synchronization

- E:QA store message hashes

- E:QA store synchronization

- E:Docs store message hashes (maybe only one epic for docs? this could contain a blogpost about the feature and how to use it for both operators and developers)

- E:Docs store synchronization

- Should QA be its own epic? Seems fair as it is done by a different team.

- Do we need the dupe everywhere? Should we have one epic per subteam? It depends on the level of granularity.

- Should we estimate work per epic?

Small Engineering

Now, [Epic] 4.1: Basic front end for node operator, as an engineering case study:

Provide a simple and non-technical means for users to interact with Waku nodes, enhancing usability, especially for workshops and new users.

The output is clear: an MVP FE to enable interacting with a locally running nwaku node. This seems to lend itself well to being defined as a milestone instead of an epic.

The work of this milestone would be:

- developing the front end

- adjusting nwaku REST API end points to enable the front end

- incorporating FE in nwaku-compose

One js-waku engineer would need to spend most of their time on this, with the assistance of a nwaku engineer if REST API endpoints need to be updated.

It does not really seem worth splitting this work into epics.

Could this then be a “milestone-epic”?

Or do we want the following epics?

- E:nwaku basic operator front end

- E:web basic operator front end (not js-waku per se but we are using js-waku’s engineers skills)

- E:QA basic operator front end: a good point here in terms of defining QA work.

Or are we over-engineering the project management?

Mix Research/Engineering

RLN in resource-restricted clients

This is a mixed research and engineering issue.

It would lend itself well to a milestone: making RLN usable in the browser.

Scope could be limited to browser, handling mobile in a different milestone.

In terms of epic split, there are a number of proposed mitigations, and each mitigation could be its own epic (or pair of epics):

1. E:js-waku optimize download of RLN WASM file

2. i. E:Research storing RLN tree onchain
   ii. E:js-waku storing RLN tree onchain

3. i. E:nwaku enabling RLN tree download from a service
   ii. E:js-waku enabling RLN tree download from a service

However, the milestone may be complete with only (1) and (3). With the concept of bet, we may want to bet on one approach first (1) + (3) before starting work on a different approach (2).

In this instance, the epic concept seems unnecessary.

Engineering

[Epic] Enhance light push protocol

This is a potential “follow-up” milestone. The light push protocol was released and has proven useful to web and mobile applications, with frequent usage by many web developers and recent integration in Status Mobile.

Developers have reported that the error handling is not ideal and that more guidance is necessary, hence enhancing the protocol seems a safe bet. Cost is low as error handling can be inspired by filter.

Epic break out:

- E:nwaku enhance light push protocol: assuming this includes the RFC work and proto file update

- E:go-waku enhance light push protocol

- E:js-waku enhance light push protocol

- E:QA enhance light push protocol

- E:Docs enhance light push protocol

The work for this change is quite minimal but we do end up with one Epic per CC (assuming only one CC per subteam needs to do the work).

Each activity is likely to be tracked with a single issue and a few PRs.

Again, the use of several epic labels seems to be overkill in this instance.

Critical Work and Closure

This approach addresses the concerns of identifying critical vs non-critical work.

Only critical work should be included in a milestone. Non-critical features should be left for future milestones, if we decide to continue investing in a specific functionality.

The other topic was around closure. Currently with Gen 0 milestone, some epics remain open even if the feature is available to the network (implemented in nwaku) because it’s not yet in js-waku.

With the proposed approach, the completion per subteam matches an epic: once the epic’s subteam is done, the subteam can have a sense of completion even if the milestone is still open.

Conclusion

From the case studies, it seems that the proposed approach for milestone split works for larger bodies of work that include research and need to happen across all/most clients.

However, for simpler features, the concept of epic seems useless and unnecessarily increases project management overhead, with a high number of milestones.

An alternative is to bundle work in milestones, as done for Gen 0. But this defeats the purpose of having a budget per milestone, as bundled milestones are likely to experience several scope changes over time.

I am keen to hear from Logos leadership and other BUs about their experience and insights, to better understand the expectations and practices.