The biggest lesson for Waku in 2023 was that we gave raw protocols to users, whether they were Status, hackathon developers or other projects.

There was, and still is, a strong will for Waku to build agnostic technology. Not only do we do FOSS, but we do it in a way that is truly Free Software.

This sometimes gets in the way of providing good abstractions and good defaults.

We corrected this in 2024 by owning the peer-to-peer reliability protocol [1] and implementation. It is now integrated in Status applications, which are much more reliable (one missing block, end-to-end reliability [2], is soon to be completed).

The final step to this is defining a deliberate API for users to consume Waku, the Messaging API [3].

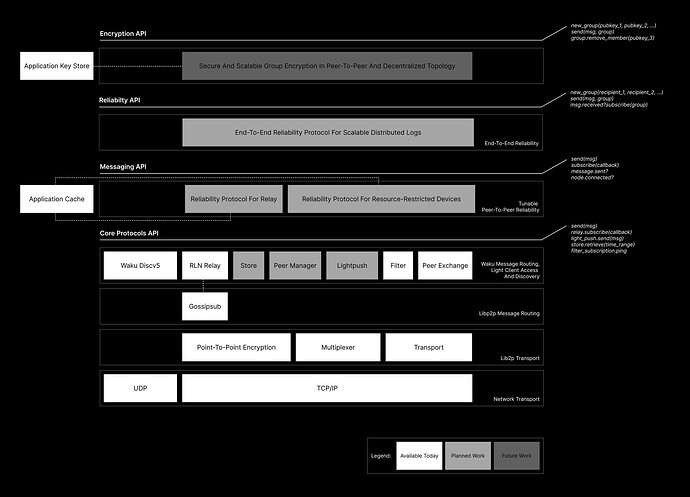

I often refer to the image below to describe the target layers of the Waku SDK (taken from Message Reliability and Waku API blog article [4]).

The work for the Messaging API has started [Sasha’s PR].

The Reliability API is next. The end-to-end reliability protocol, Scalable Data Sync (SDS), is near completion. The js-waku team has also implemented it and is already thinking about what such a Reliability API would look like.

Waku Store Dichotomy

The role of the Waku Store protocol in the Waku stack has been controversial throughout Waku’s history. I actually suggested renaming it to Waku Cache a few years back, but I guess the name has already crystallised.

The controversy comes from the dichotomy of this protocol:

- It exists to enable mostly-offline devices to retrieve past and missed messages

- But it is not a decentralized data storage solution; that is not Waku’s problem domain, it is Codex’s.

A Waku protocol stack without Store would mean frequent retransmissions, at the cost of bandwidth and latency. This is already what is done with MVDS [5] in Status. But this has implications for rate limits, and until RLN is properly integrated as a foundation to avoid excessive bandwidth usage, it would be unwise to push for more retransmission mechanisms.

Moreover, Waku, and Waku Store in particular, have been used as solutions for several problems the Status app faced in terms of data durability. They are used to back up user settings, hold 30 days of Community history, and hold unbounded Community member lists.

The unrestricted use of Waku Store, and the absence of effective rate limits, ultimately led to the very issues that Waku v2 aimed to address, including excessive bandwidth consumption, mobile data drain, and battery depletion.

In terms of Store usage, the question is: for how long should we expect messages to be present in store nodes?

However, most applications cannot answer this question as they lack rate limiting. Once RLN is in place, the following criteria can be considered to answer it:

- Amount of data: the message rate limit, maximum message size, number of shards, number of users and usage patterns (statistical distributions) are needed to evaluate the amount of data an application produces.

- Decentralization: what are reasonable database sizes to enable the desired level of decentralization and performance?
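To make the "amount of data" criterion concrete, here is a rough worst-case estimate of what a store node would need to hold for one shard. It is only a sketch: the type, the function name and every number in it are hypothetical, and it assumes users are spread evenly across shards and all publish at their rate limit.

```typescript
// Hypothetical names and numbers, chosen only to illustrate the arithmetic.
interface TrafficAssumptions {
  messagesPerUserPerEpoch: number; // per-user rate limit (e.g. enforced with RLN)
  epochSeconds: number; // length of one rate-limit epoch
  maxMessageBytes: number; // maximum message size
  activeUsers: number; // users publishing at the limit (worst case)
  shards: number; // number of shards the traffic is spread across
}

// Upper bound on the bytes a store node keeps for ONE shard over the
// retention window, assuming users are spread evenly across shards.
function worstCaseBytesPerShard(t: TrafficAssumptions, retentionDays: number): number {
  const epochs = (retentionDays * 24 * 60 * 60) / t.epochSeconds;
  const usersPerShard = t.activeUsers / t.shards;
  return usersPerShard * t.messagesPerUserPerEpoch * epochs * t.maxMessageBytes;
}

const example: TrafficAssumptions = {
  messagesPerUserPerEpoch: 1,
  epochSeconds: 10,
  maxMessageBytes: 150 * 1024,
  activeUsers: 8000,
  shards: 8,
};

const bytes30d = worstCaseBytesPerShard(example, 30);
console.log(`${(bytes30d / Math.pow(1024, 4)).toFixed(2)} TiB per shard for 30 days`);
```

Real usage patterns will sit far below this bound, which is why the statistical distributions matter, but the bound is what determines whether running a store node stays within reach of many operators.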

From a user and product point of view:

- What messages need to be persisted?

- How often do we expect users to open the app?

- What is the friction-to-inactivity ratio? If a user hasn’t opened the app for a year, it may be fine to expect them to set up their login again; within a day, it may be expected that all messages get loaded without friction; and in between, some added latency from retrieving data from Codex or BitTorrent may be acceptable.
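The friction-to-inactivity trade-off can be written down as a small policy function. This is a sketch only: the plan names and the two thresholds (one day and one year, taken from the example in the last question above) are illustrative assumptions, not product decisions.

```typescript
// Hypothetical policy: how much friction is acceptable after a period of inactivity.
type RetrievalPlan =
  | "store-only" // everything loads from store nodes, no friction
  | "store-plus-archive" // older data comes from e.g. Codex or BitTorrent, with added latency
  | "re-setup"; // the user is asked to set up their login again

const DAY_MS = 24 * 60 * 60 * 1000;

function retrievalPlan(
  inactiveMs: number,
  storeRetentionMs: number = DAY_MS, // assumed store retention window
  resetupAfterMs: number = 365 * DAY_MS, // assumed cut-off for a fresh setup
): RetrievalPlan {
  if (inactiveMs <= storeRetentionMs) return "store-only";
  if (inactiveMs < resetupAfterMs) return "store-plus-archive";
  return "re-setup";
}

console.log(retrievalPlan(2 * 60 * 60 * 1000)); // two hours of inactivity
console.log(retrievalPlan(30 * DAY_MS)); // a month of inactivity
```

The answer to "for how long should messages be present in store nodes?" is then the value chosen for `storeRetentionMs`, which the data-volume criteria constrain.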

Waku Store and Messaging API

The Waku Messaging API is here to provide an opinionated way to use the Waku protocols. Opinionated so that it can provide fair out-of-the-box reliability, for both relay and edge nodes [6], packaged in a simple API with a minimal learning curve for new developers.

Good default parameters are also important, done in a lean manner; not everything needs to be configurable. We can define four levels of configurability:

- Developer must decide the parameter value every time

- A good default is provided, but the developer can change the value

- No configuration is possible; the developer is welcome to use lower-level APIs

- Lower-level APIs do not allow this either; the developer can fork.
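As an illustration, the first three levels could map onto an options type roughly as follows. This is a sketch under assumptions: every option name and default value here is hypothetical and none of them come from an existing Waku API.

```typescript
// Hypothetical option names and values, for illustration only.

// Level 1: no default exists, so the developer must decide every time.
interface RequiredOptions {
  contentTopics: string[];
}

// Level 2: a good default is provided, but the developer can change it.
interface TunableOptions {
  storeQueryIntervalMs: number;
}

const DEFAULTS: TunableOptions = { storeQueryIntervalMs: 60000 };

// Level 3: fixed by this API; changing it means using lower-level APIs.
const MAX_STORE_QUERY_WINDOW_MS = 24 * 60 * 60 * 1000;

// Level 4 has no representation here at all: the value is fixed in the
// lower-level APIs too, so changing it means forking.

type MessagingOptions = RequiredOptions & Partial<TunableOptions>;
type ResolvedOptions = RequiredOptions &
  TunableOptions & { readonly maxStoreQueryWindowMs: number };

function resolveOptions(opts: MessagingOptions): ResolvedOptions {
  return {
    contentTopics: opts.contentTopics,
    storeQueryIntervalMs: opts.storeQueryIntervalMs ?? DEFAULTS.storeQueryIntervalMs,
    maxStoreQueryWindowMs: MAX_STORE_QUERY_WINDOW_MS,
  };
}

console.log(resolveOptions({ contentTopics: ["/my-app/1/chat/proto"] }));
```

Keeping level 3 and 4 values out of the options type is the point: the type itself documents what the API has an opinion about.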

I believe a critical opinion we must uphold, for the scalability, performance, and reliability of Waku, is that Waku Store is not a decentralized storage solution.

Hence, I strongly suggest we do not include direct access to Waku Store in the Messaging API.

Instead, the Messaging API should only provide indirect access to Waku Store, as described in the peer-to-peer reliability spec [1]:

- Periodic store queries: when subscribing to a content topic, periodic store queries can be kicked off for, or extended to include, this content topic.

- Connection loss detection: when a connection loss is detected (network, or app shut down), a store query can be triggered, as currently implemented in the go-waku API.

- Message confirmation: when sending a message, a hash store query is done to check if a remote store node received it.
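The three indirect uses can be sketched as a planner that sits inside the API and decides which store queries to issue, so the developer never writes one. This is a minimal sketch under assumptions: the event and query shapes below are hypothetical, not the js-waku or go-waku APIs, and the actual network calls are left out.

```typescript
// Hypothetical shapes, not taken from js-waku or go-waku.
type ApiEvent =
  | { kind: "subscribe"; contentTopic: string }
  | { kind: "tick"; nowMs: number } // periodic timer fired
  | { kind: "connectionRestored"; nowMs: number } // after a detected connection loss
  | { kind: "sent"; messageHash: string };

type StoreQuery =
  | { kind: "byContentTopics"; contentTopics: string[]; fromMs: number; toMs: number }
  | { kind: "byHashes"; hashes: string[] };

class StoreQueryPlanner {
  private topics: string[] = [];
  private lastCoveredMs: number;

  constructor(startMs: number) {
    this.lastCoveredMs = startMs;
  }

  // Returns the store queries the API should run in reaction to an event.
  plan(event: ApiEvent): StoreQuery[] {
    switch (event.kind) {
      case "subscribe":
        // Periodic queries will include this content topic from now on.
        if (this.topics.indexOf(event.contentTopic) === -1) {
          this.topics.push(event.contentTopic);
        }
        return [];
      case "tick":
      case "connectionRestored": {
        if (this.topics.length === 0) return [];
        // Query only the time range not covered by a previous query.
        const query: StoreQuery = {
          kind: "byContentTopics",
          contentTopics: this.topics.slice(),
          fromMs: this.lastCoveredMs,
          toMs: event.nowMs,
        };
        this.lastCoveredMs = event.nowMs;
        return [query];
      }
      case "sent":
        // Message confirmation: ask a store node whether it has this hash.
        return [{ kind: "byHashes", hashes: [event.messageHash] }];
    }
  }
}

const planner = new StoreQueryPlanner(1000);
planner.plan({ kind: "subscribe", contentTopic: "/my-app/1/chat/proto" });
console.log(planner.plan({ kind: "connectionRestored", nowMs: 5000 }));
```

Because every query is bounded by the subscribed content topics and by the time elapsed since the last query, this shape leaves no room for using store as a general-purpose database.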

The Reliability API is also expected to provide indirect usage of store, to retrieve messages detected as missing by SDS.

status-go integration

Such an API will better guide developers to use Waku in an efficient manner, meaning in a way that enables censorship-resistant properties, which large centralized databases do not. It will also push them towards better-suited solutions (Codex) for data durability needs.

This approach will also enable us to easily spot bad smells in Status’s usage of Waku; not that we are not already aware of them.

One of the steps will be to ensure that the Messaging API, and only this API, is used in status-go, as a way to dogfood it and ensure the cleanest-ever Status/Waku boundary (and an even cleaner one once the Reliability API is used).

status-go uses Waku for many things, so we will first have to focus on ensuring that this API is used by the Chat SDK (one-to-one chat and private groups only). As we improve chat protocols, the limitations of the API will ensure that we go in a sustainable direction.

Some obvious bad smells will appear from that, such as large store queries when setting up a profile. Fixing those would be easy to articulate as “the Messaging API must be used”, without having to restate all the underlying reasons why (store is not a decentralized storage protocol).

And who knows, with store abstracted away, we might be able to finally rename it.