This document outlines the path towards a minimum viable product (MVP) implementation of service incentivization in Waku.

Note: Although a protocol is not a product per se, we will use the familiar acronym MVP (“minimum viable product”) for the proposed initial implementation of an incentivized light protocol.

Background

The Waku network consists of nodes running RLN Relay. Such nodes may also run Waku light protocols and expose the corresponding services to clients. In the context of a light protocol, the node that provides the service is referred to as a server or a service node, and the node that consumes the service is referred to as a client or a light node.

Note: Contrary to traditional web services, servers in the context of this work should be thought of as access points to a service provided by a decentralized network (Waku) rather than owners of such a service. This document uses the terms server and service node interchangeably.

Waku aims for economic sustainability, which implies that clients pay servers for their services. Currently, no such payment scheme is implemented. This document drafts a roadmap towards service incentivization in Waku, in particular:

- lists design choices relevant for the task at hand;

- proposes an initial architecture suitable for a simplified yet functioning MVP implementation;

- outlines directions for future work.

The MVP will be used exclusively for testnet purposes. There are no plans to integrate the MVP into the Status app. The aim is to develop a simple application (possibly web-based) that utilizes the MVP, enabling internal testing and feedback collection. That should help us identify the key friction points that affect users most, allowing us to prioritize post-MVP improvements.

Defining the Scope

Let us define the scope of service incentivization in the context of Waku as a whole.

We can divide Waku protocols into three areas:

- the interaction among RLN Relay nodes and the RLN smart contract;

- the interaction between a service node and light clients (the topic of this document);

- the interaction between a Waku-based project (like Status) and its end-users.

This document focuses on the interaction between service nodes and light nodes. Communication between RLN Relay nodes, RLN provision for service nodes, and projects’ interactions with their end-users are out of scope.

Choosing Lightpush for Protocol Incentivization MVP

We propose using Lightpush with RLNaaS as the first service to incentivize.

Lightpush is a light protocol that allows clients to send messages to the Waku network without running RLN Relay. Instead, a client asks a server to send a message to the network on its behalf. The server attaches its RLN proof to the client’s message (aka RLNaaS, or proofs-as-a-service) and sends the message to the network. Note that the client not only consumes computational and bandwidth resources of a server, but also part of its RLN rate limit.
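To make the rate-limit cost concrete, here is a minimal sketch of the bookkeeping a server might do so that proxied client messages plus its own messages stay within its RLN budget. The class name and parameters are hypothetical; real RLN parameters (epoch length, per-membership message limit) are set by the network, and actual enforcement is cryptographic rather than a local counter.

```python
import time
from typing import Optional


class RlnEpochBudget:
    """Hypothetical per-epoch message budget of one RLN membership.

    Every message the server publishes, including Lightpush messages it
    proxies for clients, consumes one slot of the same budget.
    """

    def __init__(self, messages_per_epoch: int, epoch_seconds: int):
        self.limit = messages_per_epoch
        self.epoch_seconds = epoch_seconds
        self.epoch: Optional[int] = None
        self.used = 0

    def try_consume(self, now: Optional[float] = None) -> bool:
        """Reserve one message slot in the current epoch, if any remain."""
        now = time.time() if now is None else now
        epoch = int(now // self.epoch_seconds)
        if epoch != self.epoch:
            self.epoch, self.used = epoch, 0  # new epoch: the budget resets
        if self.used >= self.limit:
            return False  # publishing more would exceed the rate limit
        self.used += 1
        return True


budget = RlnEpochBudget(messages_per_epoch=2, epoch_seconds=60)
print([budget.try_consume(t) for t in (0, 1, 2, 61)])  # [True, True, False, True]
```

A server whose budget is exhausted by paying clients cannot publish its own messages in that epoch, which is one way to see why the client should pay for this resource.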

Lightpush is convenient for an incentivization MVP mainly because it can be cleanly separated into request-response pairs. This makes it more suitable for a simple one-transaction-per-request payment scheme (see below). In contrast, other light protocols (Filter and Store) imply that the server, in general, sends back multiple responses for one request.

Architecture Overview

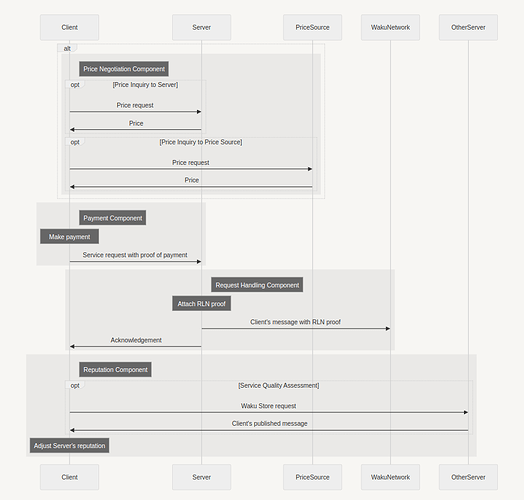

The incentivized Lightpush MVP contains the following components:

- Price Negotiation: the client learns how much to pay for the service it needs;

- Payment: the client pays, generates a proof of payment, and sends the proof alongside its request to the server;

- Request Handling: the server handles the request;

- Reputation (including Service Quality Assessment): the client assesses the quality of service and adjusts the server’s reputation.

The overall architecture is shown in the following diagram:

Source code (Mermaid):

```mermaid
sequenceDiagram
    participant Client
    participant Server
    participant PriceSource
    participant WakuNetwork
    participant OtherServer

    alt
        rect rgba(0, 0, 0, 0.05)
            Note right of Client: Price Negotiation Component
            opt Price Inquiry to Server
                Client->>Server: Price request
                Server->>Client: Price
            end
            opt Price Inquiry to Price Source
                Client->>PriceSource: Price request
                PriceSource->>Client: Price
            end
        end
    end

    rect rgba(0, 0, 0, 0.05)
        Note right of Client: Payment Component
        Note over Client: Make payment
        Client->>Server: Service request with proof of payment
    end

    rect rgba(0, 0, 0, 0.05)
        Note right of Server: Request Handling Component
        Note over Server: Attach RLN proof
        Server->>WakuNetwork: Client's message with RLN proof
        Server->>Client: Acknowledgement
    end

    rect rgba(0, 0, 0, 0.05)
        Note right of Client: Reputation Component
        opt Service Quality Assessment
            Client->>OtherServer: Waku Store request
            OtherServer->>Client: Client's published message
        end
        Note over Client: Adjust Server's reputation
    end
```

The following sections go into more detail for each of the components.

Price Negotiation

We suggest a take-it-or-leave-it approach to price negotiation.

The server announces the price, and the client either accepts or rejects it. The server also decides which tokens it accepts. This scheme is simple and also quite similar to how most purchases are made in the B2C world: customers generally don’t negotiate prices with service providers.

The server may publish its prices off-band, e.g., on its website. The client can then look up the prices and pay the correct amount right away, without a price request. This option assumes that the client knows the server’s payment address.
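The take-it-or-leave-it rule fits in a few lines. The sketch below assumes a hypothetical `PriceOffer` record that maps each token the server accepts to a per-request price; the field names, token symbols, and amounts are illustrative and not part of any Waku specification.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class PriceOffer:
    """Hypothetical price announcement; not a Waku specification type."""
    server_id: str
    # Token symbol -> price per Lightpush request, in the token's smallest unit.
    # The server alone decides which tokens appear here.
    prices: Dict[str, int] = field(default_factory=dict)


def accept_offer(offer: PriceOffer, token: str, max_price: int) -> bool:
    """Take-it-or-leave-it: the client can only accept or reject, never counter."""
    price = offer.prices.get(token)
    return price is not None and price <= max_price


offer = PriceOffer("server-a", {"USDC": 100, "SNT": 2500})
print(accept_offer(offer, "USDC", max_price=150))  # True
print(accept_offer(offer, "USDC", max_price=50))   # False: too expensive
print(accept_offer(offer, "DAI", max_price=150))   # False: token not accepted
```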

Payment

Within the Payment component, the following design decisions must be made:

- Which network is used as payment rails?

- Does the client pay before or after the service is delivered?

- What does the client provide as proof of payment?

- Does the client pay for every request, or pre-pay for multiple requests at once?

Payment Rails

We propose using an EVM-based L2 network (possibly Status Network, subject to its development timeline) as payment rails.

Potential options for payment rails include L1, L2, or off-band fiat payments. Off-band fiat payments are less preferable, as we strive for decentralization in general. L1 payments generally exhibit higher latencies and higher costs, albeit with stronger security guarantees. Low latency is important for UX, especially with the one-transaction-per-request payment scheme (see below). Overall, as we deal with relatively low-value transactions, the trade-off that L2s provide looks acceptable.

Pre-payment or Post-payment

We propose using a pre-payment scheme.

The main reason to use pre-payment rather than post-payment is to protect the server. The server faces two risks:

- The client consumes more resources than they pay for;

- The client issues too many requests.

Pre-payment addresses both risks with economic means. A prepaid client can’t consume more service than they paid for and is discouraged from sending too many requests.

Proof of Payment

We propose using a transaction ID as proof of payment.

The steps related to proof of payment are similar on L1 and L2:

- The client sends a transaction to the server’s address;

- The client receives a transaction ID (a transaction hash) from the network used as payment rails;

- The client attaches the transaction ID to its request as proof of payment;

- The server looks up the client’s transaction to ensure that it is confirmed.

Implementation details for proof of payment depend on the architecture of a particular (L2) network chosen as payment rails.
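As a sketch of the server-side check in the last step above, assuming an EVM-style L2 and a native-token transfer: the `Tx` record, the dictionary standing in for an RPC lookup on the L2 node, and the `min_confirmations` parameter are all hypothetical. The rule that each transaction ID pays for exactly one request is also an assumption, added so that one payment cannot be replayed across requests.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Set


@dataclass
class Tx:
    """Hypothetical view of a payment transaction on the payment rails."""
    tx_id: str
    to: str
    value: int
    block_number: Optional[int]  # None while the transaction is still pending


class PaymentVerifier:
    def __init__(self, server_address: str, price: int, min_confirmations: int = 1):
        self.server_address = server_address
        self.price = price
        self.min_confirmations = min_confirmations
        self.spent: Set[str] = set()  # assumption: one tx ID pays for one request

    def verify(self, tx_id: str, chain: Dict[str, Tx], head: int) -> bool:
        """Check a client's proof of payment (a tx ID) before serving the request."""
        if tx_id in self.spent:
            return False  # replayed proof of payment
        tx = chain.get(tx_id)  # stand-in for an RPC lookup on the L2 node
        if tx is None or tx.block_number is None:
            return False  # unknown or not yet included
        if head - tx.block_number + 1 < self.min_confirmations:
            return False  # not confirmed deeply enough
        if tx.to != self.server_address or tx.value < self.price:
            return False  # wrong recipient or underpayment
        self.spent.add(tx_id)
        return True


chain = {"0xabc": Tx("0xabc", to="0xServer", value=100, block_number=10)}
verifier = PaymentVerifier("0xServer", price=100, min_confirmations=2)
print(verifier.verify("0xabc", chain, head=10))  # False: only 1 confirmation
print(verifier.verify("0xabc", chain, head=11))  # True
print(verifier.verify("0xabc", chain, head=12))  # False: tx ID already used
```

Only after `verify` returns `True` would the server attach its RLN proof and publish, which is how pre-payment protects the server.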

Payment Frequency

We propose a one-transaction-per-request payment scheme: the client pays for each request separately.

The alternative is bulk payments, where a client pre-pays for multiple future requests with one transaction. Bulk payments amortize transaction costs over multiple requests. The drawback of bulk payments is that a client assumes the risk that the server would keep the money but fail to provide the services. This risk can be mitigated in one of two ways:

- build trust between the client and a server, which likely requires a robust reputation system;

- establish atomicity between service delivery and payment (so that the server cannot claim the payment without providing the service), which requires additional cryptographic schemes and is only applicable to provable services (see below).
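A back-of-the-envelope comparison makes this trade-off concrete. The sketch assumes a fixed per-request `price`, a fixed transaction `fee` on the payment rails, and bulk batches of `n` prepaid requests; all values are illustrative integers in the same unit.

```python
def per_request_cost(k: int, price: int, fee: int) -> int:
    """Total spent for k requests when every request is its own transaction."""
    return k * (price + fee)


def bulk_cost(k: int, n: int, price: int, fee: int) -> int:
    """Total spent for k requests prepaid in batches of n requests."""
    batches = -(-k // n)  # ceiling division: a partial batch is still bought whole
    return batches * (n * price + fee)


def max_loss_per_request(price: int, fee: int) -> int:
    """Worst case if the server defaults: one payment plus its fee."""
    return price + fee


def max_loss_bulk(n: int, price: int, fee: int) -> int:
    """Worst case if the server defaults right after a bulk payment."""
    return n * price + fee


price, fee = 10, 2
print(per_request_cost(100, price, fee))  # 1200
print(bulk_cost(100, 50, price, fee))     # 1004
print(max_loss_per_request(price, fee))   # 12
print(max_loss_bulk(50, price, fee))      # 502
```

Bulk payments save on fees but multiply the amount at risk by roughly `n`, which is why they depend on trust or atomicity.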

We conclude that bulk payments are better suited for later development stages, whereas the traditional drawbacks of the one-transaction-per-request scheme (latency and transaction cost), typical for L1 deployments, will be alleviated on L2.

Whether such a payment scheme would put too much stress on the payment rails is left for future analysis.

Request Handling

The server handles requests as it does currently in the non-incentivized version of the Lightpush protocol.

Note that the Waku network doesn’t provide an acknowledgement to the server.

To check whether the message has been published, nodes may use the Store protocol. In the context of this work, we assign this responsibility to the client.

Reputation

We propose a local reputation system to punish bad servers and increase the client’s security in the long run.

A client initially assigns a reputation score of 0 to all servers. After each request, the client performs Service Quality Assessment: if the service quality was unsatisfactory, it decreases the server’s reputation score; if the service was of good quality, it increases the score. How much to increase or decrease the score is up to each client.
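A minimal sketch of such a local reputation table follows. The step sizes (`reward=1`, `penalty=5`) are hypothetical; an asymmetric penalty is just one choice a client could make. Picking the highest-scoring server via `best` is likewise an assumption about how a client might use the scores.

```python
from typing import Dict, Iterable


class LocalReputation:
    """Client-side reputation table; every unknown server starts at 0."""

    def __init__(self, reward: int = 1, penalty: int = 5):
        # Step sizes are the client's choice, not fixed by the protocol.
        self.reward = reward
        self.penalty = penalty
        self.scores: Dict[str, int] = {}

    def record(self, server_id: str, service_ok: bool) -> None:
        """Adjust the score after each Service Quality Assessment."""
        delta = self.reward if service_ok else -self.penalty
        self.scores[server_id] = self.scores.get(server_id, 0) + delta

    def best(self, candidates: Iterable[str]) -> str:
        """Prefer the highest-scoring server; unseen servers count as 0."""
        return max(candidates, key=lambda s: self.scores.get(s, 0))


rep = LocalReputation()
rep.record("server-a", True)
rep.record("server-a", True)
rep.record("server-b", False)
print(rep.best(["server-a", "server-b", "server-c"]))  # server-a
print(rep.best(["server-b", "server-c"]))              # server-c
```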

Local reputation scoring does not fully solve the problem of client security. Malicious servers can accept payment from a new client and fail to provide the service; however:

- the client’s monetary loss is minimal with one-transaction-per-request payment scheme;

- over time, the client accumulates knowledge about servers’ reputation;

- trust-based third-party scoreboards (akin to L2Beat or 1ML) can be used as a supplementary reputation mechanism.

Service Quality Assessment

Service Quality Assessment is an essential part of a reputation scheme. To decide how to change a server’s reputation, the client must evaluate the quality of the service provided. In the context of Lightpush, the client should check whether the message has been broadcast by making a Store request to another server.

Store is a Waku light protocol that allows a client to query a server for a message that was previously relayed in the Waku network. Note that Store will also be incentivized in the future. Until Store incentivization is rolled out, service quality assessment for Lightpush is free (at least in monetary terms).
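The check can be sketched as follows. `StoreClient`, `query_hashes`, and `FakeStore` are hypothetical stand-ins, not the actual Waku Store API, and the content topic is only an example. The store node queried should be a different server from the Lightpush server; a real client would also likely wait a little before querying, since a freshly published message may not be stored yet.

```python
from typing import Dict, Iterable, List, Protocol


class StoreClient(Protocol):
    """Hypothetical interface to a Store server; not the real Waku Store API."""

    def query_hashes(self, content_topic: str) -> Iterable[str]:
        ...


def message_was_published(store: StoreClient, content_topic: str, msg_hash: str) -> bool:
    """Service Quality Assessment for Lightpush: did the message reach the network?"""
    return msg_hash in set(store.query_hashes(content_topic))


class FakeStore:
    """In-memory stand-in for a second Store server, for illustration only."""

    def __init__(self, archive: Dict[str, List[str]]):
        self.archive = archive  # content topic -> hashes of stored messages

    def query_hashes(self, content_topic: str) -> List[str]:
        return self.archive.get(content_topic, [])


store = FakeStore({"/my-app/1/chat/proto": ["0x01", "0x02"]})
print(message_was_published(store, "/my-app/1/chat/proto", "0x02"))  # True
print(message_was_published(store, "/my-app/1/chat/proto", "0x03"))  # False
```

The resulting boolean is exactly what a local reputation update needs as input.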

The client may also use other methods for assessing service quality.

Post-MVP roadmap

A generalized service marketplace is the medium- to long-term vision for Waku client incentivization. As first post-MVP steps, incentivization will be extended to other Waku protocols like Store and Filter. Ultimately, an open market for arbitrary services will be established, using Waku as a plug-and-play component for service provision and payment.

The rest of this section lists potential directions for post-MVP development related to service incentivization.

Server and Price Discovery

In the MVP, server discovery and price discovery happen off-band. Post-MVP, more decentralized methods will be considered. For instance, server announcements (along with provided services and prices) can be distributed in the Waku network itself, in a special shard or content topic on which service nodes broadcast and clients listen. Such dedicated information channels may be treated as system features and could therefore offer clients free Store queries and Filter subscriptions.
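For illustration, here is how a client might consume such announcements. The JSON payload format and the `Announcement` fields are hypothetical, since no announcement format is specified yet; the parser skips malformed payloads rather than failing, as anyone can publish to such a channel.

```python
import json
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Announcement:
    """Hypothetical service announcement a server might broadcast."""
    server_id: str
    service: str
    price: int
    token: str


def parse_announcements(payloads: List[bytes]) -> List[Announcement]:
    """Decode JSON payloads, skipping malformed ones instead of failing."""
    result = []
    for raw in payloads:
        try:
            data = json.loads(raw)
            result.append(Announcement(data["server_id"], data["service"],
                                       int(data["price"]), data["token"]))
        except (ValueError, KeyError, TypeError):
            continue  # ignore garbage on the announcement channel
    return result


def cheapest(announcements: List[Announcement], service: str,
             token: str) -> Optional[Announcement]:
    """Pick the lowest announced price for a service in a given token."""
    offers = [a for a in announcements if a.service == service and a.token == token]
    return min(offers, key=lambda a: a.price, default=None)


payloads = [
    b'{"server_id": "a", "service": "lightpush", "price": 120, "token": "USDC"}',
    b'{"server_id": "b", "service": "lightpush", "price": 90, "token": "USDC"}',
    b"not json",
]
print(cheapest(parse_announcements(payloads), "lightpush", "USDC").server_id)  # b
```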

Price Negotiation

Multi-Step Price Negotiation

A more involved price negotiation scheme would be a multi-step process, where the client can make a counter-offer, which the server then accepts or rejects. The negotiation is repeated for up to a certain number of steps. The server and the client may optionally commit to the agreed-upon price for a certain number of future requests to amortize the cost of price negotiation across them. A related research direction is whether servers should be able to offer zero fees to attract clients. Such a scheme would need additional protection against abuse, and we should ensure that these protection measures preserve clients’ privacy.
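A bounded counter-offer loop could look like the sketch below. The linear bidding strategy, the server's private `server_floor`, and all parameter names are hypothetical choices made for illustration, not a proposed specification.

```python
from typing import Optional


def negotiate(ask: int, server_floor: int, first_bid: int, client_max: int,
              step: int, max_steps: int) -> Optional[int]:
    """Return the agreed price, or None if there is no deal within max_steps rounds.

    ask:          the server's announced price
    server_floor: lowest counter-offer the server accepts (private to the server)
    first_bid:    the client's opening counter-offer
    client_max:   highest price the client will pay (private to the client)
    """
    cap = min(ask, client_max)  # never offer more than the ask or the budget
    bid = min(first_bid, cap)
    for _ in range(max_steps):
        if bid >= server_floor:
            return bid  # the server accepts this counter-offer
        if bid == cap:
            return None  # the client cannot raise further
        bid = min(bid + step, cap)  # rejected: the client raises its offer
    return None  # step limit reached without agreement


print(negotiate(ask=100, server_floor=80, first_bid=60, client_max=90, step=10, max_steps=5))  # 80
print(negotiate(ask=100, server_floor=80, first_bid=60, client_max=90, step=10, max_steps=2))  # None
```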

Payment

Bulk Payments

Bulk payments optimize the one-transaction-per-request scheme by allowing:

- The client to pay once for N future requests.

- The server to record the client’s balance.

- The server to decrement the client’s balance while serving each request.

In this setup, the client effectively extends a line of credit to the server, which the server repays by providing the requested service.
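The server-side balance tracking described above can be sketched as a small ledger. The class and method names are hypothetical, verification of the bulk payment transaction is elided, and keying balances by a client ID is an assumption that would itself have privacy implications.

```python
from typing import Dict


class PrepaidLedger:
    """Server-side record of client balances under bulk pre-payment (illustrative)."""

    def __init__(self, price_per_request: int):
        self.price = price_per_request
        self.balances: Dict[str, int] = {}  # client ID -> remaining prepaid amount

    def deposit(self, client_id: str, amount: int) -> None:
        """Credit a bulk payment (checking the payment transaction is elided)."""
        self.balances[client_id] = self.balances.get(client_id, 0) + amount

    def charge(self, client_id: str) -> bool:
        """Debit one request; refuse service once the prepaid balance runs out."""
        balance = self.balances.get(client_id, 0)
        if balance < self.price:
            return False
        self.balances[client_id] = balance - self.price
        return True


ledger = PrepaidLedger(price_per_request=10)
ledger.deposit("alice", 30)  # prepays for N = 3 requests
print([ledger.charge("alice") for _ in range(4)])  # [True, True, True, False]
```

Whatever remains in `balances` is the credit the client has extended to the server, i.e., the amount at risk if the server defaults.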

The main advantage of bulk payments is better scalability, as transaction costs are spread out across multiple requests. However, the drawback is the additional risk to the client: if the server defaults on its promise of service provision, the client stands to lose more money than in the one-transaction-per-request scenario.

The reputation system becomes more important in the case of bulk payments. It may be possible to develop a cryptographic scheme (sometimes referred to as “credentials”) that links service performance to value transfer to the server.

Lower-Latency L2 Proof-of-Payment with Preconfirmations

Preconfirmations, a trending topic in L2 R&D, can further reduce payment latency.

On L2, the definition of a “confirmed” transaction is more complex than on L1. Multiple actors—such as sequencers and provers—participate in L2 transaction processing. The core idea behind preconfirmations is that parties responsible for transaction processing issue signed promises of future transaction inclusion. Under a weaker trust model, such promises can signify transaction confirmations to servers that choose to trust preconfirmation issuers.

In the context of Waku, preconfirmations or similar add-ons in L2 protocols mean lower latencies and better user experience. However, changes in trust assumptions should also be considered.
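The change in trust assumptions can be expressed as a server-side acceptance policy. In this hypothetical sketch, the server serves a request either on full confirmation or on a preconfirmation from an issuer it has chosen to trust; `PaymentStatus`, `trusted_issuers`, and the issuer names are illustrative, and verifying the preconfirmation signature is elided.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass
class PaymentStatus:
    """Hypothetical view of a client's payment as seen by the server."""
    confirmed: bool                # confirmed under the server's usual rule
    preconf_issuer: Optional[str]  # signer of a preconfirmation, if any


def accept_payment(status: PaymentStatus, trusted_issuers: FrozenSet[str]) -> bool:
    """Serve on full confirmation, or earlier if a trusted party preconfirmed."""
    if status.confirmed:
        return True
    return status.preconf_issuer in trusted_issuers


trusted = frozenset({"sequencer-1"})
print(accept_payment(PaymentStatus(False, "sequencer-1"), trusted))  # True
print(accept_payment(PaymentStatus(False, "unknown"), trusted))      # False
```

An empty `trusted_issuers` set recovers the stricter trust model, in which only confirmed payments count.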

Gasless Payments

To further reduce the barrier to entry for clients, gasless payment schemes (e.g., involving paymasters) will be considered.

Reputation and Service Quality Assessment

Global Reputation

Global reputation allows clients not only to accumulate knowledge about servers’ behavior and performance, but also to share this information, additionally protecting new clients. A central trusted reputation registry, while technically possible, would be a central point of failure (or censorship). Decentralized solutions for global reputation, such as Eigentrust and Unirep, would be preferable.
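To give a flavour of how local scores can be aggregated into global ones, here is a simplified, centralized version of the core EigenTrust iteration, t ← (1 − α)·Cᵀt + α·p, where C holds row-normalized non-negative local trust and p is a pre-trusted distribution. It only illustrates the math: the actual EigenTrust protocol distributes this computation among peers (a central computation would reintroduce the single point of failure), and Unirep takes a different, zero-knowledge-based approach not shown here. The example matrix and `alpha=0.15` are illustrative.

```python
from typing import List


def eigentrust(local: List[List[float]], pretrusted: List[float],
               alpha: float = 0.15, iters: int = 50) -> List[float]:
    """Simplified EigenTrust power iteration.

    local[i][j]: peer i's local trust in peer j (e.g., a local reputation score);
    pretrusted:  distribution p over peers, summing to 1.
    """
    n = len(local)
    # Normalize each row of non-negative local trust; peers with no
    # positive opinions fall back to the pre-trusted distribution.
    c = []
    for row in local:
        pos = [max(v, 0.0) for v in row]
        total = sum(pos)
        c.append([v / total for v in pos] if total > 0 else list(pretrusted))
    t = list(pretrusted)
    for _ in range(iters):
        # t_j <- (1 - alpha) * sum_i c[i][j] * t_i + alpha * p_j
        t = [(1 - alpha) * sum(c[i][j] * t[i] for i in range(n)) + alpha * pretrusted[j]
             for j in range(n)]
    return t


# Peers 0 and 1 vouch for each other; peer 1 had bad experiences with peer 2.
local = [[0, 5, 0], [5, 0, -3], [0, 0, 0]]
trust = eigentrust(local, [1 / 3, 1 / 3, 1 / 3])
print(min(range(3), key=lambda j: trust[j]))  # 2: peer 2 ends up least trusted
```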

Mutually Trusted Escrow for Service Quality Assessment

A server and a client may agree on a mutually trusted party (“escrow”) for service quality assessment. The escrow server would verify the server’s performance and report the results to the client. In the context of Lightpush, the escrow server would check whether a message has been relayed. It remains to be seen whether an escrow-based scheme is justified for lower-value payments expected in Waku light protocols.

Provable Services

Certain services can be verified cryptographically by the client. A toy example is revealing a preimage of a given hash value. For such services, a trustless verification procedure can be constructed. However, real-world scenarios for the usage of such services may be limited.
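For the toy example, verification is a single hash computation, which is what makes it trustless: the client needs no third party to check the server’s answer. SHA-256 is an arbitrary choice for this sketch.

```python
import hashlib


def verify_preimage(challenge: bytes, revealed: bytes) -> bool:
    """Trustless check: anyone can recompute the hash, no third party needed."""
    return hashlib.sha256(revealed).digest() == challenge


# Toy service: the client pays for the preimage of this hash.
challenge = hashlib.sha256(b"waku").digest()
print(verify_preimage(challenge, b"waku"))   # True
print(verify_preimage(challenge, b"relay"))  # False
```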

Privacy and Anonymity

The protocol will be improved to provide stronger privacy guarantees for the client. In particular, zero-knowledge notes may be used to remove linkability between the client’s IP address and their wallet address.

Waku Sustainability and Service Incentivization

The long-term goal of Waku is to become financially sustainable. Service incentivization may be a step in that direction.

Broadly speaking, there are two ways to capture value from Waku protocols:

- Redirect part of the value flow (a “tax” on every transaction); or

- Link the functioning of the Waku protocol to the value accrual of a specific asset.

We will explore these and potentially other options to ensure Waku’s long-term sustainability.

Conclusion

This post introduces incentivized Lightpush as the first Waku light protocol to be incentivized. We describe the proposed MVP architecture and explain our design choices. We also outline directions for future work and discuss light protocol incentivization in the broader context of Waku’s overall sustainability.

Comments and suggestions are appreciated!

UPD (2024-09-19)

Thank you everyone for your insightful comments!

I’ve updated the post to reflect some discussion items, in particular:

- specified the goals of the MVP more clearly;

- suggested using Store (and not Filter) for service quality assessment;

- added more post-MVP research directions including privacy and anonymity.

The next steps will be:

- update the specification to reflect the choice of Lightpush as the first incentivized service;

- start working on the implementation.

Thanks once again for the discussion!