Hi, I was directed here for more questions about Carnot.

So far it seems plausible. At first I thought it was n log n rather than log n on a per-node basis, but on further inspection I see why it’s log n. Now I’m looking at the edge cases. I typically start with the CFT scenario before looking at the BFT cases, and my first question with that is:

What happens when a top level parent’s entire committee takes a big old liveness dump?

Let’s say it can’t meet quorum. The entire half branch of the tree is dropped and the network would halt. You can’t even do a view change without a GST rule-based reshuffle, and even then the entire network would be unlikely to even have 51%, let alone the 2/3rds required for a decision. I notice that under VII.-E (Safety failure analysis) there’s a description of why this would be unlikely to cause a safety issue, and I agree, but it doesn’t address a network halt scenario, does it? Are you able to do view changes without consensus? I’m very unclear about timeouts, plz halp!
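To put rough numbers on the halt scenario, here is a back-of-the-envelope sketch. It assumes a complete binary tree of equal-sized committees and a quorum of strictly more than 2/3 of all committees, both of which are my own simplifications and may not match the paper. The names `surviving_fraction` and `can_reach_quorum` are made up for illustration.

```python
from fractions import Fraction


def surviving_fraction(depth: int) -> Fraction:
    """Share of committees still reachable when one child of the root fails.

    Assumption: complete binary tree with `depth` levels, so 2**depth - 1
    committees in total, and the failed child takes its whole subtree
    (2**(depth - 1) - 1 committees) down with it.
    """
    total = 2**depth - 1
    lost = 2 ** (depth - 1) - 1
    return Fraction(total - lost, total)


def can_reach_quorum(depth: int, threshold: Fraction = Fraction(2, 3)) -> bool:
    """True if the surviving share is strictly above the assumed threshold."""
    return surviving_fraction(depth) > threshold


for depth in (3, 5, 10):
    share = surviving_fraction(depth)
    print(depth, share, float(share), can_reach_quorum(depth))
```

Under these assumptions the surviving share sits a little above one half and shrinks toward one half as the tree gets deeper, so it never clears a 2/3 threshold once the tree has more than one level.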

If this is answered in the paper, apologies, sometimes it’s difficult to keep everything straight after first reading.

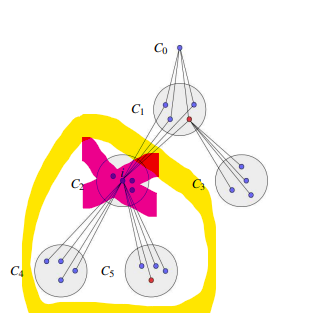

Next question is about decision latency, aka time-to-finality. I propose a decision (typically a block in a chain, but in all honesty it could be literally anything, as this isn’t married to any data structure), and each committee from the leaf onward proposes a decision (either colored or accept/reject). The parents then vote if the QC reached quorum etc etc. Each “round” takes up to some “round timeout”, and there will be at most log(n) timeouts for each decision. So if the timeout is, say, 1 second, and you have 1024 nodes, you’d need up to 10 seconds for each block to come to decision. Not bad, better than Eth2 by far. But not great either if you’re looking for responsiveness. Maybe you’re not. I know Phil Daian isn’t a fan of low-latency protocols. Either way, in a globally distributed production environment this will depart from “web page” speed as the network grows, no? Is that not a design goal?
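Here is the arithmetic I’m doing, as a minimal sketch. It assumes the number of rounds is log2 of the node count (my reading, which may be off if the depth is really a function of the number of committees) and that every round runs all the way to its timeout. `worst_case_latency` is just a name I picked.

```python
import math


def worst_case_latency(num_nodes: int, round_timeout_s: float) -> float:
    """Upper bound on time-to-decision if every round hits its timeout.

    Assumption: one round per tree level, with ceil(log2(num_nodes)) levels.
    """
    rounds = math.ceil(math.log2(num_nodes))
    return rounds * round_timeout_s


for n in (1024, 65536, 1048576):
    print(n, worst_case_latency(n, 1.0))
```

With a 1 second timeout this gives 10 seconds at 1024 nodes, and the bound keeps creeping up by one timeout every time the network doubles.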